BoxLib AMR

In modern computational studies of combustion systems, the disparity of scales is increasing rapidly as we begin to simulate more realistic experimental configurations. This situation is particulary evident in high pressure systems of practical relevance for advanced low-emissions combustion systems. The Exascale Simulation of Combustion in Turbulence (ExaCT) co-design center, is targeting a structured-grid Adaptive Mesh Refinement (AMR) approach to local refinement to address these difficult nonuniform resolution requirements. Structured-grid AMR offers enormous potential for improving computational efficiency. However, as we move toward calculations at the exascale there are a number of challenges that must be addressed.

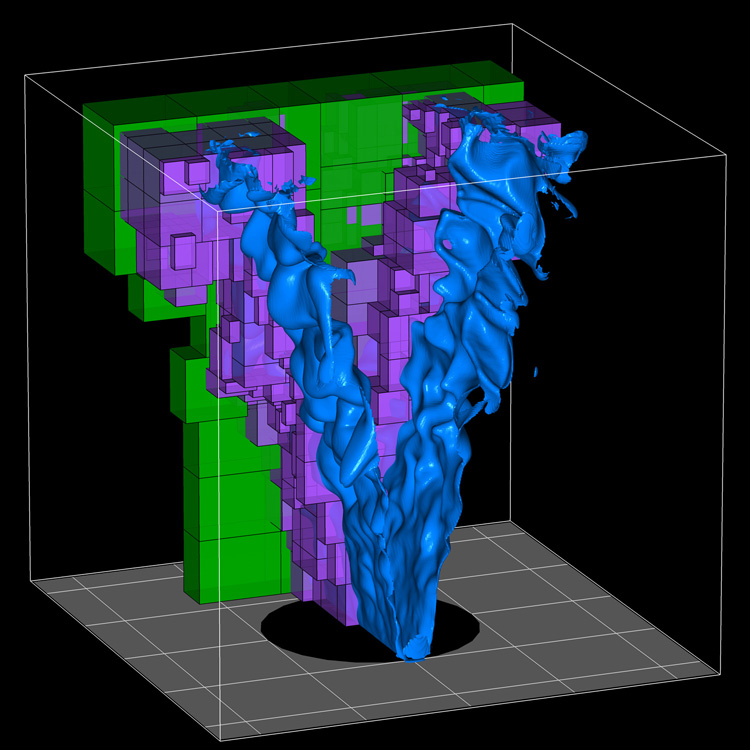

Using a block-structured approach to AMR, regions requiring additional resolution are identified dynamically as the solution evolves and then a collection of boxes are created to cover those regions with the desired level of grid resolution. Each box in this context represents a rectangular region in index space, and a large collection of computational mesh cells to carry the associated state data needed to represent the solution on that region of physical space. A key feature of the block-structured approach is that the boxes represent large, aggregate collections of cells, and thus suggest a natural mechanism for expressing hierarchical parallelism. A two-level paradigm distributes these large patches (and the computational work associated with them) across “nodes” of the machine, while the cores in each node access the data in worker “threads” using memory that is shared across the node. Modern AMR implementations using this approach have scaled effectively to more than 100K cores.

While the two-level approach discussed above might extend to much larger computing hardware systems than even the most modern implementations, the paradigm will not be effective on a machine with significant penalties for nonlocal memory access across and within the nodes. In this case, an approach with a much deeper hierarchy seems more appropriate. For example, we could decompose the domain into regions that are distributed to nodes. However, each of these regions would themselves be represented by a union of patches. Within the node, the patches would be internally mapped to groups of processors within based on the characteristics of the memory and network within the node. The work within each node could then be further decomposed into finer grained pieces to be distributed over cores. Key co-design issues in this setting include how to control the data layout within a node to minimize data movement, and how to schedule the computational work given the characteristics of the communication and compute hardware.

Another issue relevant to AMR is load balancing. When the grids in the hierarchy change through a regridding operation we must dynamically rebalance the computational load. We note that for problems where the underlying physics generates heterogeneity in the work load, dynamical load balancing can also be necessary even for uniform-grid codes. Current practice is to use work estimates to predict the amount of work needed to advance the solution on each grid and use that information to allocate grids to processors. For homogeneous physics, the number of cells in a grid is a good estimate; however, for other processes something more sophisticated is required. For example for detailed chemical kinetics, one can use an estimate of how hard the problem was in the previous time step to estimate the work required for the next time step and use that estimate for load balancing. As we push to the exascale there are several levels of sophistication that can be used to further improve load balancing. One approach is to develop heuristic work estimates, similar to the approach described above for reactions, and use those estimates for load balancing. An alternative is to perform detailed performance modeling of key computational kernels and use those to develop more accurate work estimates. This task could be performed in conjunction with performance tests and autotuning of those kernels. A third alternative would be to develop a statistical model for the work required by a given module and dynamically fit that model on the fly as the execution proceeds. For this latter strategy, we would use performance models as a starting point for gathering statistics. An interesting feature of this approach is that it provides the ability to detect and compensate for differences in hardware performance. A final issue with respect to load balancing is to determine whether the work estimates for disparate processes are sufficiently dissimilar to merit a different data layout for the various components of the model. This issue will need to be addressed by using hardware simulators to estimate the tradeoffs.

For more information, please contact John Bell of Lawrence Berkeley National Laboratory at [email protected].