In Situ Analytics and Visualization

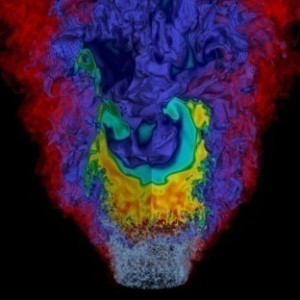

Visualization and analytics are a key part of the scientific process. They provide the qualitative and quantitative summaries of large-scale scientific simulation data that are used to extract fundamental insights. With the onset of exascale computing, the current paradigm of performing a simulation, saving data to disk, and deploying visualization and analysis as a post-process will no longer be feasible due to I/O constraints. The ExaCT center is developing the algorithms and infrastructure necessary to move these computations in situ, minimizing data storage and movement requirements.

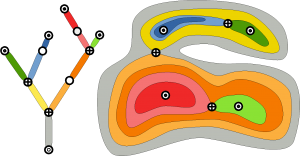

Gleaning insight from scientific data is an interactive process; therefore, we are focusing our efforts on capabilities that reduce data significantly yet still allow for interactive knowledge discovery after the simulation is complete. For example, topology-based data analysis techniques offer a concise multi-scale representation of features in the data and can compress the semantic results of a simulation, narrowing the gap between data size and limited storage. Furthermore, by computing and storing ray attenuation functions in situ, scientists retain the flexibility to modify the resulting images afterward. Such in situ images are often used to visually monitor and diagnose simulations on the fly, and they can capture highly transient, intermittent phenomena that occur between the time steps saved to disk.
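
To make the idea of a concise multi-scale representation concrete, the following is a minimal sketch of one common topology-based technique: persistence pairing of the maxima of a scalar field, computed with a union-find sweep. It is an illustration only. The 1D field, the function names `persistence_pairs` and `simplify`, and the choice of persistence as the feature measure are all assumptions made for this example, not a description of the ExaCT implementation.

```python
def persistence_pairs(values):
    """Pair each local maximum of a 1D field with the value at which its
    superlevel-set component merges into a component with a higher peak.

    Returns a list of (birth, death) pairs. The global maximum never merges,
    so it is paired with -infinity. (Hypothetical helper for illustration.)
    """
    n = len(values)
    # Sweep the samples from the highest value to the lowest.
    order = sorted(range(n), key=lambda i: values[i], reverse=True)
    parent = [None] * n   # None marks a sample that has not been swept yet
    peak = {}             # component root -> index of its highest sample

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    pairs = []
    for i in order:
        parent[i] = i
        peak[i] = i
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] is not None:
                ri, rj = find(i), find(j)
                if ri == rj:
                    continue
                # The component with the lower peak is absorbed.
                if values[peak[ri]] > values[peak[rj]]:
                    hi, lo = ri, rj
                else:
                    hi, lo = rj, ri
                # Skip the trivial case where the sample just swept joins
                # an existing component; only real merges produce a pair.
                if peak[lo] != i:
                    pairs.append((values[peak[lo]], values[i]))
                parent[lo] = hi

    root = find(order[0])
    pairs.append((values[peak[root]], float("-inf")))
    return pairs


def simplify(pairs, threshold):
    """Keep only the features whose persistence (birth - death) reaches the threshold."""
    return [(b, d) for (b, d) in pairs if b - d >= threshold]


field = [1, 3, 2, 5, 0, 4]
pairs = persistence_pairs(field)
significant = simplify(pairs, threshold=2)
```

Here the six samples reduce to three (birth, death) pairs, and raising the threshold discards the low-persistence bump at value 3 while keeping the two prominent peaks. Storing such pairs instead of the raw field is what allows features to be re-thresholded interactively after the simulation has finished.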

Moving visualization and analytics in situ poses a myriad of challenges that require both the evolution of state-of-the-art algorithms and the introduction of new theoretical foundations. These challenges include the development of distributed graph representations and of graph processing and visualization techniques. While some distributed capabilities do exist, they are not designed for exascale heterogeneous systems with specialized processors, deep memory hierarchies, and high levels of concurrency.

To address these issues, the ExaCT center is exploring solutions to the problem of analytics for exascale combustion simulations at several levels of granularity. At the highest level, development includes skeleton applications to analyze the impact of hardware support and network topologies on algorithm performance. At the next level of granularity, compact applications that represent the key algorithmic components of analysis and visualization modules are being developed. These applications allow stress testing of the design choices at the intra-node and the inter-node level. At the intra-node level, the choice of heterogeneous processors and deep memory hierarchies will impact the performance of highly parallel local computations. At the inter-node level, the choice of network communication will impact the scaling of algorithms such as global feature relationship detection. At the finest level of granularity, key kernels that can benefit from hardware support are identified, such as fast sorting kernels, image compositing, and support for integrated volume visualization. In addressing these challenges, all design decisions are guided by co-design principles and made in close collaboration with team members in applied math, hardware simulation, programming models, and I/O middleware.
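
As an illustration of why sorting and image compositing appear together among the key kernels, the sketch below composites partial images in depth order with the standard "over" operator on premultiplied-alpha RGBA pixels. It is a serial toy version under stated assumptions: the names `over` and `composite`, the single depth value per partial image, and the list-of-tuples image layout are hypothetical choices for this example and do not reflect the ExaCT kernels themselves.

```python
def over(front, back):
    """Composite one premultiplied-alpha RGBA pixel over another."""
    fr, fg, fb, fa = front
    br, bg, bb, ba = back
    t = 1.0 - fa  # fraction of the back pixel still visible through the front
    return (fr + t * br, fg + t * bg, fb + t * bb, fa + t * ba)


def composite(partials):
    """Blend partial images front to back.

    partials: list of (depth, image) tuples, where image is a list of
    premultiplied RGBA pixels and a smaller depth is closer to the viewer.
    (Assumed layout for illustration only.)
    """
    # The depth sort is the step a fast sorting kernel would accelerate.
    ordered = sorted(partials, key=lambda p: p[0])
    result = [(0.0, 0.0, 0.0, 0.0)] * len(ordered[0][1])
    for _, image in ordered:
        result = [over(acc, px) for acc, px in zip(result, image)]
    return result


# Two one-pixel partial images: an opaque blue layer behind a
# half-transparent red layer.
partials = [
    (2.0, [(0.0, 0.0, 1.0, 1.0)]),
    (1.0, [(0.5, 0.0, 0.0, 0.5)]),
]
final = composite(partials)
```

In a distributed setting each node would contribute one partial image and the blending would be arranged as a parallel reduction; because the "over" operator is associative but not commutative, the depth ordering must be preserved however the reduction is scheduled.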

For more information, please contact Prof. Valerio Pascucci of the University of Utah at [email protected].